Testing at warp speed: Why you should care about your test speed

By applying a few generic techniques that you can use in your own system as well, we reduced our test run times by more than 50%, speeding up development and deployment considerably.

15-20 minute read

As you can read in our previous blog post, at AdRoll we use Erlang/OTP extensively. In particular, we use it to build our real-time bidding platform.

These systems are central to our business and that’s why we’re very rigorous about writing tests and making sure they all run smoothly in every pull request and before every deploy.

We have a fairly complex test structure which has proven useful in keeping our system maintainable. But for these tests to be truly helpful they need to run quickly because we run them all the time. Balancing test completeness and test speed is not an easy task.

In our effort to keep that balance, we have discovered quite a few things. This article describes the lessons we have learned while making sure our tests run fast enough to let developers work at top speed without compromising the quality of our software.

Why do you need to keep your tests running fast?

It’s not uncommon to find projects where a single test run can last well over 10 minutes. Test runs tend to grow steadily over time, and the systems they test are often huge. So even when everybody knows the tests are slow, nobody notices just how slow they are until it becomes critical, or until a new developer comes along and has to wait forever for their first test run to complete. But test runs lasting more than a few minutes have serious consequences…

On Development

How many test rounds do you need to develop a new feature or fix a bug? If you practice Test Driven Development you need at least three (the initial red one, the first green one after implementing your code and the second green one after refactoring). But even if you don’t practice TDD, you will probably still want to test the code you write before submitting a pull request.

Three test runs, several minutes each, means a lot of time spent just waiting for tests to run. And more often than not while you wait for tests to run you can’t do much else. Even if you start working on something else in parallel while the tests run, context switching is generally not good for developers. Besides, your new task will most likely also require running tests.

On top of that, if you have configured your Continuous Integration tool as we have (we run a full round of tests for each pull request and for each branch merged into master), then merging even the smallest PR would take at least two full test runs – sometimes many more depending on how many PRs are open at the same time and how fast or parallelizable your CI is.

These scenarios lead to two opposite approaches to dealing with long-running tests:

- Being overly careful with your code modifications. Not touching anything that is not 100% related to what you’re implementing for fear of making a passing test fail. Behaving like this slows down your refactoring process considerably, affecting the overall code quality of the system.

- Including many unrelated changes in a single PR. The goal is to run CI tests just once and avoid waiting for green lights on many PRs. This affects your ability to properly review, understand, and revert your changes if needed.

Code reviews are also affected since reviewers will be more wary of requesting changes if those are not explicit merge-blockers. That’s because a change request might require the author to spend another hour running tests on their machine and then CI spending the same amount of time running the tests again. Reducing code reviews to just the most blatant problems affects the culture of your team and also the overall coherence of your code, drastically reducing maintainability.

On Deployment

We have a general policy of deploying often so that in case a deployment impacts our system performance we can detect the source of the issue quickly. The idea is that since we don’t spend too much time between deploys, we are not introducing too many changes and therefore detecting the one that’s causing problems is easier. You might find yourself in a similar situation.

But before each deploy, you want to make sure what you’re deploying actually works as expected. To achieve that, you have to include at least one full test run in your deployment process. In other words, no deploy can take less time than a full test run.

A slow process can also make people overly cautious with deploys and shrink the window in which they can happen, since nobody wants to deploy anything during the last three or four hours of their day. What if the deploy goes south and you have to redeploy what you had before (i.e. roll back)? What if you have to deploy a patch? That may very well require multiple hours of work, because you will need time to run tests on your computer, then CI on the corresponding PR, then your build system for the deploy. And that’s assuming there are no comments or failing tests in between and that you can find and fix the bug almost immediately.

In a nutshell, it’s not good to have long test runs. Keeping your test run times in check is very important for your system and your team. With that in mind, let me show you what we’ve learned so far…

Testing AdRoll’s RTB servers

In our system, we have multiple levels of testing:

- we write unit tests for our modules with EUnit.

- we write integration tests with Common Test.

- we have our own tool to run black-box tests: we run client simulations against one of our servers, and these simulations are also run from a Common Test suite.

For most of our integration tests we need to mock one or more underlying pieces (particularly those that manage connections with databases, download files from the internet or connect with external services) and for that, we use meck.

A full test run would run all of the aforementioned tests, starting from the unit tests, all the way through the integration ones and finally the black box suites. For this article, we’ll forget about unit tests (which are, predictably, the fastest ones anyway) and we’ll focus on those we run through Common Test.

Benchmarking

When trying to improve test speed, the first step is always benchmarking your test runs to find which tests are taking longest. Luckily, Common Test is a very complete and flexible framework that allows us to define hooks. Using ct_hooks, we implemented our own hook module. Actually, we implemented two of them: One for regular test runs and one for benchmarking. The code within the benchmarking module looks like…

%%% @doc Common Test Hook module for benchmarking.
-module(timer_cth).

-export([init/2]).
-export([pre_init_per_suite/3]).
-export([post_init_per_suite/4]).
%% ...and all the other required callbacks

%% os:timestamp/0 returns a {MegaSecs, Secs, MicroSecs} tuple,
%% hence the erlang:timestamp() type.
-record(state, {
    time_init_suite = os:timestamp() :: erlang:timestamp(),
    time_end_suite = os:timestamp() :: erlang:timestamp(),
    time_init_testcase = os:timestamp() :: erlang:timestamp(),
    time_end_testcase = os:timestamp() :: erlang:timestamp(),
    ...
}).

init(_Id, _Opts) -> {ok, #state{}}.

%% @doc Called before init_per_suite is called.
pre_init_per_suite(Suite, Config, State) ->
    {Config, State#state{suite = Suite, time_init_suite = os:timestamp()}}.

%% @doc Called after init_per_suite.
post_init_per_suite(Suite, _Config, Return, State) ->
    io:format(
        "CT-TIME | ~8.3f | ~p:init_per_suite~n",
        [time_diff(State#state.time_init_suite), Suite]
    ),
    {Return, State}.

%% Seconds elapsed since Init, as a float.
time_diff(Init) ->
    timer:now_diff(os:timestamp(), Init) / 1000000.

With functions as simple as the ones shown above, you can measure (and print) how much time is spent in the different stages of each test suite and test case. And if you use easily greppable strings (in this case CT-TIME), you can pipe your test run into grep and extract just the info you need for future analysis.
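The excerpt above only shows the init_per_suite callbacks. Here is a minimal sketch of per-test-case timing in the same spirit, assuming an OTP release recent enough to support the suite-aware pre_init_per_testcase/4 and post_end_per_testcase/5 hook callbacks. It uses erlang:monotonic_time/0 instead of os:timestamp/0, because monotonic time is not affected by system clock adjustments. The module name tc_timer_cth is hypothetical; this is not AdRoll’s hook.

```erlang
%% Hypothetical per-test-case timing hook (a sketch, not AdRoll's module).
-module(tc_timer_cth).

-export([init/2, pre_init_per_testcase/4, post_end_per_testcase/5]).

%% The hook state maps each running test case to its start time.
init(_Id, _Opts) ->
    {ok, #{}}.

%% Called before init_per_testcase/2: remember when the test case started.
%% InitData is normally the Config list; it is passed through untouched.
pre_init_per_testcase(_Suite, TestCase, InitData, State) ->
    {InitData, State#{TestCase => erlang:monotonic_time()}}.

%% Called after end_per_testcase/2: the elapsed time covers
%% init_per_testcase, the test case itself and end_per_testcase.
post_end_per_testcase(Suite, TestCase, _Config, Return, State) ->
    Start = maps:get(TestCase, State),
    Elapsed = erlang:monotonic_time() - Start,
    Micros = erlang:convert_time_unit(Elapsed, native, microsecond),
    io:format("CT-TIME | ~8.3f | ~p:~p~n", [Micros / 1000000, Suite, TestCase]),
    {Return, maps:remove(TestCase, State)}.
```

Like the original hook, it can be enabled only for benchmarking runs, for instance through the ct_hooks option of ct:run_test/1 or the -ct_hooks flag of ct_run.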

Lesson Learned

Use ct_hooks to benchmark your tests, and remember that you can have multiple ct_hooks modules and enable the benchmarking one only when you’re interested in the performance of your tests.
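Once the benchmarking output has been grepped, the lines still need to be ranked to point you at the slowest stages. A minimal sketch, assuming lines in the `CT-TIME | Seconds | Label` format printed by the hook above; the module name ct_time_report is hypothetical.

```erlang
%% Hypothetical helper: rank CT-TIME lines printed by a benchmarking hook.
-module(ct_time_report).
-export([slowest/2]).

%% Return the N slowest {Seconds, Label} entries found in Lines,
%% ignoring every line that is not a well-formed CT-TIME line.
-spec slowest([string()], non_neg_integer()) -> [{float(), string()}].
slowest(Lines, N) ->
    Entries = lists:filtermap(fun parse/1, Lines),
    lists:sublist(lists:reverse(lists:keysort(1, Entries)), N).

%% "CT-TIME |    1.234 | my_SUITE:init_per_suite"
%%   -> {true, {1.234, "my_SUITE:init_per_suite"}}
parse(Line) ->
    case string:split(Line, "|", all) of
        ["CT-TIME" ++ _, Secs, Label] ->
            try list_to_float(string:trim(Secs)) of
                Time -> {true, {Time, string:trim(Label)}}
            catch
                %% e.g. "********" when the value overflows ~8.3f
                error:badarg -> false
            end;
        _ ->
            false
    end.
```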

Mocking

When you write integration tests, it’s not uncommon to require a fair share of mocking. In Erlang, that’s usually accomplished using meck. Meck is a very flexible mocking library that allows you to temporarily replace existing modules with a new implementation for some or all of their functions. The usual lifecycle of a mock built with Meck looks something like this…

...
% initialize your mock
meck:new(your_module, [passthrough]),
meck:expect(
    your_module,
    a_function,
    fun(Arg) -> return_something_trivial(Arg) end
),
% run your test using the mock...
% maybe check stuff with meck, for instance...
1 = meck:num_calls(your_module, a_function, [some_expected_arg]),
% destroy the mock
meck:unload(your_module),
...

But if you write exactly that code in your test cases, you may run into trouble. If your test fails before calling meck:unload/1, that module will remain mocked and that may affect other test cases. Depending on how you have configured your test suites, Common Test sometimes boots up different processes for different tests and sometimes it reuses the same process. By default, Meck links mocks to their creating processes, so if your process crashes or stops, the mock is removed. But if Common Test uses the same process to run the next test case, the module will remain mocked, and that’s not good.

That’s why you usually want to put mock initialization and unloading outside your main test case function, and Common Test lets you do exactly that:

init_per_testcase(the_testcase, Config) ->
    % initialize your mock
    meck:new(your_module, [passthrough]),
    Config.

the_testcase(Config) ->
    meck:expect(
        your_module,
        a_function,
        fun(Arg) -> return_something_trivial(Arg) end
    ),
    % run your test using the mock...
    % maybe check stuff with meck, for instance...
    1 = meck:num_calls(your_module, a_function, [some_expected_arg]),
    ok.

end_per_testcase(the_testcase, Config) ->
    % destroy the mock
    meck:unload(your_module),
    Config.

This is also very handy if you want to mock the same module in all your tests. You just need to replace the init_per_testcase and end_per_testcase function clause heads with something like init_per_testcase(_TestCase, Config) et voilà!

But… What about performance? Now that you are mocking the same modules over and over again, it is worth asking yourself if unloading and recreating them between each pair of test cases is affecting the overall time of your test runs or not.

Turns out, it is. Meck dynamically compiles a new module each time you create a mock and then boots up a new gen_server for it (to track the history of calls to its functions, among other things). Compiling Erlang code takes several milliseconds per module (maybe even seconds, if your modules are long enough). So why not create the mocks at the beginning of the suite and unload them at the end? But if we do so, how do we avoid the issue mentioned above, namely that modules or functions remain mocked between test cases? This is the solution we are currently using:

%% We initialize our mocks for the whole suite here.
%% Notice the no_link: we want mocks to stay with us until the end of the
%% suite, regardless of how many processes common_test wants to use.
%% init_per_suite/1 must return the (possibly updated) Config.
init_per_suite(Config) ->
    ok = meck:new([module1, module2], [passthrough, no_link]),
    Config.

%% We don't do much here except for those functions
%% that we want to always mock in the same way (e.g. DB connections).
init_per_testcase(_TestCase, Config) ->
    meck:expect(module1, function1, 1, result1),
    Config.

%% Our test cases remain the same; they just set expectations
%% on the modules mocked in init_per_suite.
the_testcase(_Config) ->
    meck:expect(
        module2,
        a_function,
        fun(Arg) -> return_something_trivial(Arg) end
    ),
    % run your test using the mock...
    % maybe check stuff with meck, for instance...
    1 = meck:num_calls(module2, a_function, [some_expected_arg]),
    ok.

%% We remove all expects here so that modules, even when mocked,
%% are basically _clean_ (i.e. they work as if they weren't mocked at all).
%% That's thanks to the passthrough option we provided in init_per_suite.
end_per_testcase(_TestCase, Config) ->
    Modules = [module1, module2],
    meck:reset(Modules),
    [meck:delete(Module, Fun, Arity, false)
     || {Module, Fun, Arity} <- meck:expects(Modules, true)],
    Config.

%% We do destroy all mocks here.
end_per_suite(_Config) ->
    meck:unload([module1, module2]).

A few things to notice:

- Meck doesn’t have a delete_all function. That’s why we have a list comprehension in end_per_testcase.

- We need to clean up module statistics as well as the expects; that’s why we’re calling meck:reset/1.

- Each call to meck:delete/4 is not cheap, but it’s way cheaper than meck:new/2. We only want to call it for the functions that we are actually mocking; that’s why we use meck:expects(Modules, true) instead of meck:expects(Modules) (which would include the passthrough functions) or Module:module_info(exports) (which would include all the functions in the module).

Lesson Learned

If you’re going to reuse mocks in all your tests, create them in init_per_suite, delete the expectations in end_per_testcase, and destroy them in end_per_suite.
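To get a rough feel for the compile-and-load cost that makes repeated meck:new/2 calls expensive, you can time that step with nothing but OTP. This is an approximation under stated assumptions: it compiles and loads a tiny throwaway module (the hypothetical cc_tmp_mod) from source text N times with compile:forms/2 and code:load_binary/3. It does not call meck or start its gen_server, so real mocks, especially of larger modules, will likely cost more.

```erlang
%% Hypothetical micro-benchmark: average cost of compiling and loading
%% a trivial module, roughly the step meck repeats on every meck:new/2.
-module(compile_cost).
-export([measure/1]).

%% Returns the average time per compile-and-load, in microseconds.
measure(N) when is_integer(N), N > 0 ->
    Src = ["-module(cc_tmp_mod).", "-export([f/1]).", "f(X) -> X + 1."],
    Forms = [parse_form(S) || S <- Src],
    {Micros, ok} = timer:tc(fun() -> loop(Forms, N) end),
    Micros / N.

loop(_Forms, 0) ->
    ok;
loop(Forms, N) ->
    %% compile:forms/2 implies the binary option: no .beam file is written.
    {ok, Mod, Bin} = compile:forms(Forms, []),
    %% Drop any old version so the new binary can be loaded as current.
    code:purge(Mod),
    {module, Mod} = code:load_binary(Mod, "cc_tmp_mod.erl", Bin),
    loop(Forms, N - 1).

%% Turn one line of source text (ending in a dot) into an abstract form.
parse_form(Str) ->
    {ok, Tokens, _EndLocation} = erl_scan:string(Str),
    {ok, Form} = erl_parse:parse_form(Tokens),
    Form.
```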

CT Comments

Common Test comes with quite a few little-known secrets. One of my favorites is ct:comment/1,2 together with the {comment, ""} return value. They let you add some context to your tests that ends up in the generated HTML summary of your test runs, so you can see at a glance where a test failed. For instance, consider this test:

failing_test(_Config) ->

ct:comment("Dividing by zero using div should not fail"),

infinity = 1 div 0,

ct:comment("Dividing by zero should not fail"),

infinity = 1 / 0,

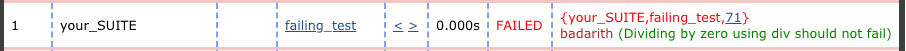

{comment, ""}.That test will obviously fail and thanks to ct:comment/1 you will get something like this in your test report:

It’s a really nice feature but, as you might already be guessing at this point, it has some performance issues. In and of themselves, calls to ct:comment/1,2 are not really that bad; they take 100ms or so. But if you write tests like the following one…

looping_test(_Config) ->
    lists:foreach(
        fun(I) ->
            ct:comment("Dividing by ~p using div should not fail", [I]),
            true = is_integer(1 div I),
            ct:comment("Dividing by ~p should not fail", [I]),
            true = is_float(1 / I)
        end, lists:seq(100, 1, -1)),
    {comment, ""}.

In cases like the one above, each successful run of the test calls ct:comment/2 200 times (twice per iteration, for 100 iterations). At roughly 100ms per call, that adds up to about 20 seconds for a single test case.

Lesson Learned

If you’re going to use ct:comment/1,2, make sure you’re not calling it within a recursive function or loop.
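If you still want that context inside a loop, one option (a suggestion, not something our suites necessarily do) is to call the comment function only when an iteration fails and then re-raise the original exception, so the test case still fails with its real error. The sketch takes the comment function as a parameter so it can be exercised outside Common Test; in a suite you would pass fun ct:comment/2. The module and function names are hypothetical, and the Class:Reason:Stack catch syntax assumes OTP 21 or later.

```erlang
%% Hypothetical helper: comment only on the failing iteration.
-module(comment_on_failure).
-export([check_all/3]).

%% Run Check(I) for every I in Items. Comment(Format, Args) is called
%% once, for the first item whose check raises, and the exception is
%% then re-raised unchanged. Successful runs never call Comment.
check_all(Items, Check, Comment) ->
    lists:foreach(
        fun(I) ->
            try
                Check(I)
            catch
                Class:Reason:Stack ->
                    Comment("Check failed for ~p", [I]),
                    erlang:raise(Class, Reason, Stack)
            end
        end, Items).
```

In a suite, a loop like the one above could become a single call such as comment_on_failure:check_all(lists:seq(100, 1, -1), fun(I) -> true = is_integer(1 div I) end, fun ct:comment/2).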

Parallelization

Common Test lets you choose how to run your tests with groups/0. You can run tests multiple times, in sequential order, in parallel, etc. But sometimes, for instance with our black-box tests, you can’t just run all the tests in parallel to speed up the process as a whole. In our case, each of our tests is booting up a different instance of our server (with different configuration parameters) and hitting it with our client simulator to verify that it produces the responses we expect. In theory, we could run these tests in parallel, booting up as many servers as we need, etc. But in practice, that requires a lot of isolation-related effort that is just not worth it.

Nevertheless, it’s worth considering that the parallelism that Common Test gives you is certainly not the only one you can take advantage of. Check how our tests looked when we realized this:

init_per_testcase(Exchange, Config) ->
    Folder = local_folder(Exchange),
    download_samples_from_s3(Exchange, Folder),
    boot_up_server(Exchange),
    Config.

foo(_Config) ->
    % run client tests against the server
    ...
    ok.

bar(_Config) ->
    % run client tests against the server
    ...
    ok.

end_per_testcase(Exchange, Config) ->
    Folder = local_folder(Exchange),
    delete_folder(Folder),
    tear_down_server(Exchange),
    Config.

As you can see, we were performing all these operations (i.e. download from S3, server start, test run, folder removal, server stop) sequentially for each test case. Also, the file download from S3 was one of the most expensive operations in that suite. So we refactored that code into something like this…

init_per_suite(Config) ->
    download_all_samples(),
    Config.

init_per_testcase(Exchange, Config) ->
    boot_up_server(Exchange),
    Config.

foo(_Config) ->
    % run client tests against the server
    ...
    ok.

bar(_Config) ->
    % run client tests against the server
    ...
    ok.

end_per_testcase(Exchange, Config) ->
    tear_down_server(Exchange),
    Config.

end_per_suite(_Config) ->
    delete_files().

And we made sure that download_all_samples/0 downloaded as many files from S3 as it could in parallel, spawning several Erlang processes and waiting for a done signal from each of them.
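The post doesn’t show download_all_samples/0 itself. Below is a minimal sketch of the spawn-and-wait pattern it describes, assuming each download can be wrapped in a one-argument function; parallel_run and its functions are hypothetical names, not AdRoll’s code. Each worker sends {done, Ref, Result} to the parent, errors are caught and returned instead of being lost, and results come back in input order.

```erlang
%% Hypothetical helper: run Fun(Item) for all Items concurrently.
-module(parallel_run).
-export([run_all/3]).

%% Returns one {ok, Value} or {error, _} per item, in input order.
%% Timeout applies to each await, so the worst case when every worker
%% hangs is length(Items) * Timeout milliseconds.
run_all(Fun, Items, Timeout) ->
    Parent = self(),
    Refs = [spawn_worker(Parent, Fun, Item) || Item <- Items],
    [await(Ref, Timeout) || Ref <- Refs].

spawn_worker(Parent, Fun, Item) ->
    Ref = make_ref(),
    _Pid = spawn(fun() ->
                     Result = try {ok, Fun(Item)}
                              catch Class:Reason -> {error, {Item, Class, Reason}}
                              end,
                     Parent ! {done, Ref, Result}
                 end),
    Ref.

%% Selective receive on the unique Ref keeps results in order.
await(Ref, Timeout) ->
    receive
        {done, Ref, Result} -> Result
    after Timeout ->
        {error, timeout}
    end.
```

For example, download_all_samples/0 could call parallel_run:run_all(fun download_sample/1, SampleKeys, 60000) and fail init_per_suite unless every result is {ok, _}; download_sample/1 and SampleKeys are placeholders.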

Lesson Learned

You can use groups/0 in your suites to parallelize tests, but even if you can’t parallelize whole test cases, you can still use pure Erlang to run some parts of them simultaneously.

What About You?

We found all these things in our constant effort to have quick tests and an agile development methodology. We’re sure we’re not alone here, so why don’t you let us know how you speed up your tests in the comments below?

Do you enjoy building high-quality large-scale systems? Roll with Us!